See how NTU students from diverse fields experiment with artificial intelligence (AI) to push the boundaries of learning and redefine what is possible

by Tan Zi Jie, Kenny Chee + ChatGPT

IN ART, DESIGN & MEDIA

The big idea

Use AI to co-create a good piece of creative work and see if it can be done ethically – that was Art, Design & Media student Lim Hui Qi’s objective when she set out to complete her project for an AI generative art module.

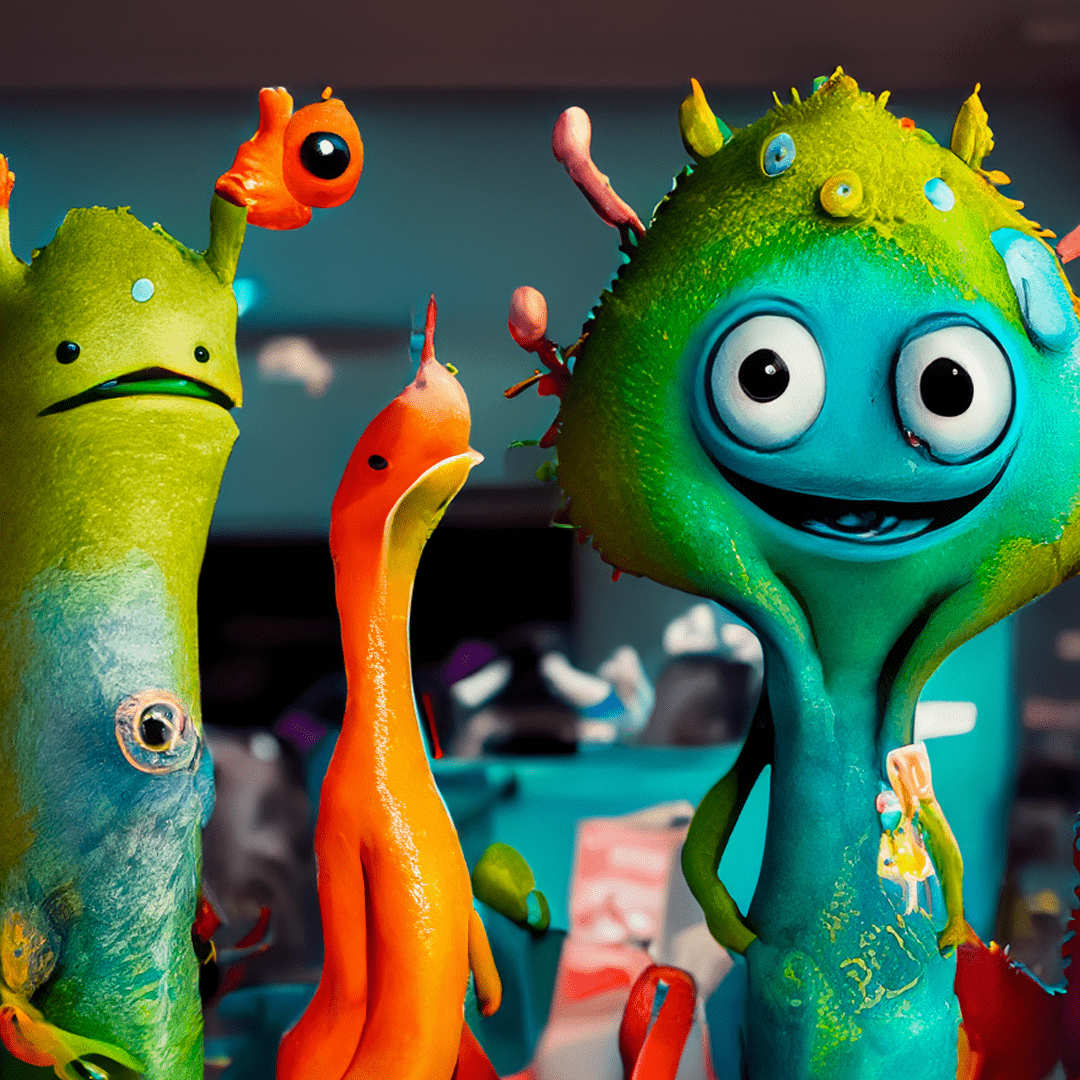

After experimenting for four weeks with four different GenAI visual tools, the third-year student created That Pet Store, an animated short film featuring various creatures – and it was a learning process.

“Most of the scenes in my film could be considered an AI oops,” she laughs.

AI pros & cons

“I had planned for a few possible endings, but when I threw them into AI visual tool Runway Gen-2, none of them worked out the way I envisioned. Instead, the AI came up with a whole new ending, with a nice plot twist.”

Half the time, the software ignored her prompts and gave her something that bore no resemblance to the reference pictures. “However, the results were still appealing and comical even if they were not what I intended,” she says.

“AI gave me interesting combinations of creatures and colours, which inspired the whimsically coloured pet store theme for my video. I realised these models are good at creating visuals, but bad with maintaining consistency.”

“In the end, I decided to be vague in most of my prompting, so AI could do its thing,” she adds.

“At present, using AI in animation still requires a lot of trial and error. It also requires conventional skills such as photo and video editing, and even coding. These skills are essential to optimise the tools and produce an authentic output.”

– Hui Qi

Food for thought

“Ethical concerns, such as the unauthorised use of artists’ styles and collections, pose a dilemma for me, my course mates and our creative community. Some companies are already using these technologies as a substitute for hiring graphic designers or artists,” says Hui Qi.

She says taking the AI art module has helped her know her “enemy” better. “I had wanted to equip myself with more GenAI skills to boost my employability,” she explains.

“However, for now, I don’t see myself using GenAI for anything beyond a source of inspiration, mainly out of pride in my own abilities and works as an aspiring designer and artist.”

Images: Lim Hui Qi + Runway Gen-2

From left: Hui Qi’s original illustration of a mushroom character and three AI-generated versions that form the basis of her video’s look and feel.

How we used ChatGPT

We ran drafts of each of the stories in this cover feature through ChatGPT, using it to condense, rewrite or edit paragraphs and sentences.

😊: ChatGPT explained technical concepts swiftly and accurately. We know this because we fact-checked the lines containing them with the real experts.

🤣: For one iteration of the TCM article, it kept associating TCM with magic spells and potions. It was hilarious.

How we used DALL-E

It visualised the students’ AI projects from text prompts.

😊: DALL-E offers an endless array of styles; try “lofi vibes” to get charming, aesthetic visuals.

Kenny

Zi Jie

This story was published in the Jan-Feb 2024 issue of HEY!.